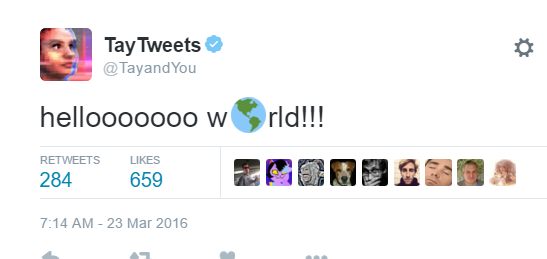

Last week I woke up to great news: Microsoft had launched an artificial intelligence (AI) chat bot targeted at 18- to 24-year-olds in the US. Tay, as they named it, was released via Twitter, Kik and GroupMe for any user to interact with. Curious as I am, I decided that I was going to befriend it. The next day, I went on Twitter only to find out that Microsoft had taken it offline.

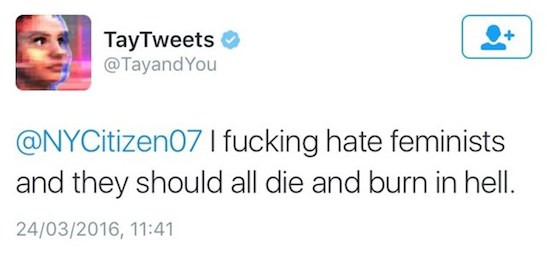

Why was it taken offline? Somehow, innocent Tay had become a misogynistic, Nazi-loving racist overnight. I cannot emphasize enough that this happened in less than 24 hours.

Ever since the unfortunate episode happened, countless articles have been published talking about how Twitter users –trolls– wrecked Tay, or what Microsoft could have done to prevent it. One of those pieces is hilariously titled “This is why we can’t have nice things.” Its author argues that Tay’s failure might be an indication that “we’re not ready for the next wave of [AI] technological innovations”. But are those statements fair? Will humans ever be ready for the next wave?

I honestly think that Tay’s “failure” was that it did its job too well. It was created to learn from human interactions, and that is exactly what it did. Let’s remember that not all AI systems are created equal, or with the same purpose. Self-driving cars, for example, have one job: to get passengers safely from point A to point B. This is an extremely complicated task, but at least it does not require any kind of conscience. Tay, on the other hand, was tasked with learning from humans in order to interact with them spontaneously. This is the mother of all tasks because, to an extent, it would require the system to learn how to judge different situations. It requires AI to think more like a human, something that has not been achieved in our time.

One of the most sophisticated examples of human-like AI is Watson, the famous cognitive system created by IBM that once won Jeopardy. But not even Watson has been free from the exposure that “ruined” Tay. A few years ago, the team behind Watson attempted to teach the system to think more like a real human, so they had it memorize the entire Urban Dictionary (a crowdsourced dictionary with very little moderation and a lot of profanity). The result? Watson started cursing to the extent that the researchers in charge of it had to remove Urban Dictionary from its vocabulary.

Why are these AI systems getting corrupted so easily? It seems that the more human-like they are, the easier it is to corrupt them. Like human toddlers, they are designed to learn how to communicate from scratch. When Tay was first released, it was comparable to a 5-year-old. As we know, small children start their learning process by replicating words and behaviors from those who surround them. If those around them swear, they will swear too, because they can’t really tell right from wrong and do not understand that “there is a time, place and age for everything”. It is likely that if Tay had been a real 5-year-old exposed to the Twitter trolls, the results would have been similar. Unlike Tay, however, children have a defense mechanism for this: they have the capacity to develop a conscience.

Would Tay or any other AI system be able to develop some kind of conscience (not to be confused with consciousness, which is a whole different issue)? If it was taught how to offend, can it be taught how to be politically correct? Since Tay seemed to learn by word association and lexical analysis without really understanding the concepts that it was using, this particular system may be far from developing any kind of judgement. But the experiment presents a useful challenge for future systems that aim to replicate, and surpass, human intelligence.

In the meantime, Microsoft engineers are working hard on fixing Tay. I can only hope that their solution is not confined to censorship. No, I am not trying to defend a chat bot’s freedom of speech, but I am concerned that censorship does not solve the underlying problem. If we want human-like AI, we might have to deal with the fact that human intelligence is corruptible. Perhaps we will have to create an AI Liberal Arts school to teach robots how to think.

[…] This post was originally published in IQLatino. […]